The lives of digital platform users are at odds with how these systems are conceived and built. Weaponised design – a process that allows for harm of users within the defined bounds of a designed system – is facilitated by practitioners who are oblivious to the politics of digital infrastructure or consider their design practice output to be apolitical. Although users find themselves subject to traumatic events with increasing regularity, weaponised design is yet to be addressed by the multi-faceted field of interface and infrastructure design.

“When people fail to follow these bizarre, secret rules, and the machine does the wrong thing, its operators are blamed for not understanding the machine, for not following its rigid specifications. With everyday objects, the result is frustration. With complex devices and commercial and industrial processes, the resulting difficulties can lead to accidents, injuries, and even deaths. It is time to reverse the situation: to cast the blame upon the machines and their design. It is the machine and its design that are at fault. It is the duty of machines and those who design them to understand people. It is not our duty to understand the arbitrary, meaningless dictates of machines.”[1]

User Experience Design has blossomed from a niche industry in the halls of Silicon Valley’s early startup darlings to a force that architects our digital lives. Today, user experience design is wholly responsible for modelling human expression and self-identity, enabling interaction and participation online. Design shares as much in common with information security research as it does with behavioural science or aesthetics. By failing to criticise common design practices or form cooperative relationships with other technology fields, user experience designers are effectively refusing to recognise and respond to traumatic cases of their own work being used to harm the users they claim to serve.

Every major technology company has grappled with examples of their platform being leveraged to maliciously harm users whilst performing entirely within expected behaviour. This is weaponised design – electronic systems whose designs either do not account for abusive application or whose user experiences directly empower attackers.

Examples of weaponised design are numerous and horrific: Using a smartphone, a technology reporter uncovers the home address of a friend by interacting with a new Snapchat feature that broadcasts her location on a map each time she posts[2]. After viewing her friend’s posts, the journalist spends a few moments pinpointing her apartment via Google Maps street view and asks her subject to confirm the address. Her friend is ‘creeped out’ as she realises that, wholly unexpectedly, her phone has violated her privacy and her trust by exposing her home address to all of her Snapchat followers without her consent. The experience makes international headlines and authorities issue another warning over the app’s potential for targeted misuse.

Meanwhile, in an effort to address targeted harassment of users, the design team at Twitter announces an adjustment[3] that silences notifications sent to trolling victims when they are added to malicious Twitter lists. The outcry is immediate[4]: removing list notifications allows harassers to compile and share lists of targets undetected. Victims are unaware they are shared targets and can’t fight back. The violation is so obvious the feature is reversed within hours[5]. Harassment across Twitter continues.

Elsewhere, two Facebook designers write about the data-driven tweaks their team has made to Newsfeed. They conclude that the changes make Facebook meaningfully easier to use, engage with and navigate, but fail to discuss the ethical or sociological implications[6] of their work, which researchers consider significant[7]. The article proves popular with the design community. A month later, the US Government enters the company’s Menlo Park offices with a warrant[8], looking for evidence of Facebook’s ability to exert major influence on voting behaviour through psychology research enabled by interface and systems design. These examples happened in 2017 alone and are all dramatic scenarios of weaponised design.

The most common way design is weaponised is through poorly considered tradeoffs, where the ideal user is prioritised over empathetic threat modelling, resulting in features that are at high risk of exploitation. Alongside this year’s Snapchat example, another famous case occurred in 2014, when attackers accessed automatic camera roll cloud backups and leaked intimate photos of mostly female victims from Apple servers[9]. In that case, the critical issue is not the actions of the attackers but informed consent. One can assume Apple’s designers took a puritan, anti-sex approach to the problem of centralised photo backup: Users don’t sext, and if they do, they wouldn’t object to intimate personal photos being synced to the cloud. As the company transferred millions of users into an opt-out automatic backup service, it failed to articulate the personal implications to its user base.

Design can also be weaponised through team apathy or inertia, where user feedback is ignored or invalidated by an arrogant, culturally homogenous or inexperienced team designing a platform. This is a notable criticism of Twitter’s product team, whose perceived lack of design-led response is seen as a core factor for enabling targeted, serious harassment of women[10] by #Gamergate[11], from at least 2014 to present day.

Finally, design can be directly weaponised by the design team itself. Examples of this include Facebook’s designers conducting secret and non-consensual experiments on voter behaviour in 2012–2016 and on the emotional states of users in 2012[12], and Target, which, as reported in 2012, informed a father of his daughter’s unannounced pregnancy through surveillance ad tech and careful communications design[13]. In these examples, designers collaborate with other teams within an organisation, facilitating problematic outcomes whose impact scales exponentially in correlation with the quality of the design input.

These three situations have one thing in common: they are the result of designers failing their users through designed systems that behave more or less as the user expected. This is a decade-long misrepresentation of the relationship and responsibilities of designers to their users, a dangerous lack of professionalism, ethics and self-regulation, along with a lack of understanding of how multi-disciplinary design is leveraged to both exploit and harm the community. As appointed user advocates, designers have yet to embrace the new tools and practices needed to continue working in an increasingly user-hostile digital world.

Corrupting Design

In 1993, Apple Computer’s Human Computer Interface research team hired cognitive psychologist Donald Norman to work with the company’s early-stage interaction team. While they were not the first to argue for the need for so-called human-centric approaches to interface design[14], Norman’s role as a ‘User Experience Architect’ marked a subtle but fundamental shift in the practice behind the design of commodified personal computer systems. By drawing from mid-twentieth century capitalist practice of product design and applying psychology and behavioural science to information architecture, the foundations for user-centric software design were laid, contributing to the company’s early successes in the West.

Today, user experience design no longer requires a sociological or behavioural science background, but these origins linger. The field encompasses everything from aesthetics, visual communication and branding to deep system and information architecture, all working in concert to define and anticipate the activities of a user through applied user research. As platforms became more commodified – especially through mobile touch mediums – UX designers have progressively become more reliant on existing work, creating a feedback loop that promotes playfulness, obviousness and assumed trust at the expense of user safety.

The focus on details and delight can be traced not just to the history of the field, but also to manifestos like Steve Krug’s Don’t Make Me Think[15], which propose a dogmatic adherence to cognitive obviousness and celebrate frictionless interaction as the ultimate design accomplishment. Users should never have to question an interface. Instead, designers should anticipate and cater to their needs: “It doesn’t matter how many times I have to click, as long as each click is a mindless, unambiguous choice.”

A “mindless, unambiguous choice” is not without cultural, social and political context. In Universal UX Design: Building Multicultural User Experience, Alberto Ferreira explores the futility of ‘universal design,’ where in a globalised world, the expression of design varies wildly[16]. Amongst many cross-cultural examples, his most striking are the failings of Western designers as they adapt their work to users in China or Japan. These hyper-connected middle-class audiences share comparable statuses for wealth, connectivity and education. But the cultural and aesthetic variances between these three societies are pronounced. Designers who produce or adapt work for these populations without a sturdy conceptual framework repeatedly fail their users. ‘Mindless and unambiguous’ is only true for those who have both the cultural context to effortlessly decode an interface, and the confidence that their comprehension is solid. Not only is this dogma an unreasonable constraint, it also frequently fails.

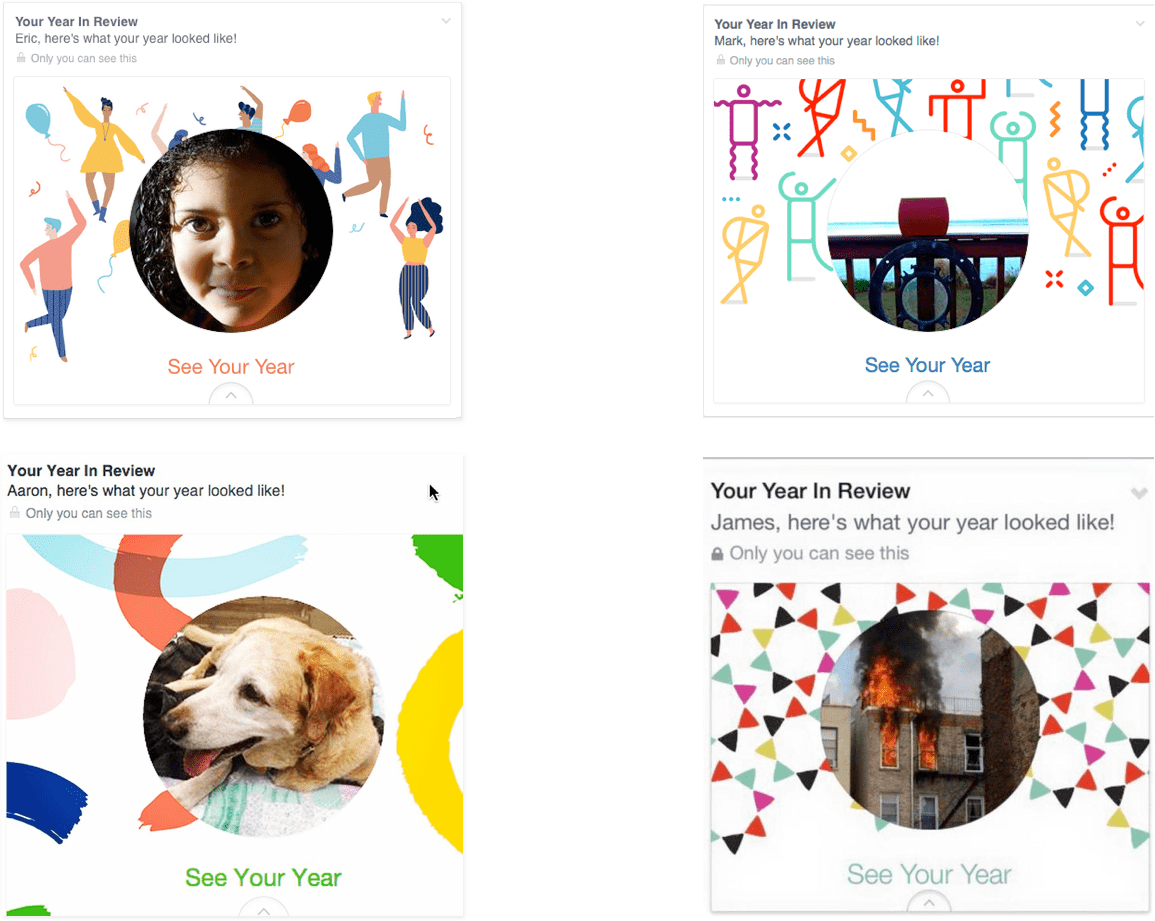

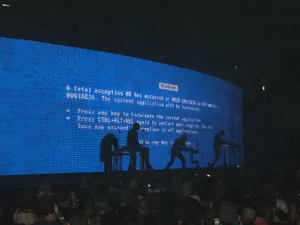

In 2014, Eric Meyer, an influential web developer, was shown a celebratory, algorithmically generated “Facebook Year in Review” featuring images of his daughter, who had died from cancer that same year. He wrote:

“Algorithms are essentially thoughtless. They model certain decision flows, but once you run them, no more thought occurs. To call a person “thoughtless” is usually considered a slight, or an outright insult; and yet, we unleash so many literally thoughtless processes on our users, on our lives, on ourselves. […] In creating this Year in Review app, there wasn’t enough thought given to cases like mine or anyone who had a bad year. The design is for the ideal user, the happy, upbeat, good-life user. It doesn’t take other use cases into account.”[18]

That the “distinguished” design team at Facebook chose to deploy an application capable of such an insensitive intrusion betrays a lack of diverse life experiences within its ranks, but this is somehow a tame example of the homogenous foundations of user experience design. At its extreme, Twitter is often singled out for its initial ideal user design, and through designing with such optimism, it has become optimised for abuse – “a honeypot for assholes”[19]. Now that the platform is an incumbent in the social media landscape, investor demand for user attention – measured as ‘platform stickiness’ – has created business incentives against modifying the weaponised effects of homogenous ideal design and against implementing subsequent protections. In direct contradiction to public claims by the company’s leadership, former and current[20] Twitter employees describe an organisation-wide disinterest in creating new models to moderate abuse on their platform or even deploying already-developed solutions.

Today’s designers continue to maintain the status quo without industry or external criticism or pressure. Whereas other industries have ethical review boards or independent investigative bodies, popular design is apathetic to harm reduction or political discourse[21]. Because of this, the industry is yet to fully examine its participation in the current techno-political climate. In 2017 – as wealthy democracies destabilise and technology is embraced in conflict-stricken societies – the ethical and empathetic failings of user experience design harm users with increasing regularity and intensity. As design has become commodified and weaponised by both platform operators and attackers, the response from designers has largely been to rearrange deck chairs on the Titanic.

Responding to a user-hostile world

Design is inherently political, but it is not inherently good. With few exceptions, the motivations of a design project are constrained by the encompassing platform or system first, and the experiences and values of its designers second. The result is designers working in a user-hostile world, where even seemingly harmless platforms or features are exploited for state or interpersonal surveillance and violence.

As people living in societies, we cannot be separated from our political contexts. However, design practitioners research and implement systems based on a process of abstracting their audience through user stories. A user story is “a very high-level definition of a requirement, containing just enough information so that the developers can produce a reasonable estimate of the effort to implement it.”[22] In most cases, users are grouped through shared financial or biographical data, by their chosen devices, or by their technical or cognitive abilities.

When designing for the digital world, user stories ultimately determine what is or is not an acceptable area of human variation. The practice empowers designers and engineers to communicate via a common problem-focused language. But practicing design that views users through a politically-naive lens leaves practitioners blind to the potential weaponisation of their design. User-storied design abstracts an individual user from a person of rich social and political agency to a collection of designer-defined generalisations. In this approach, their political and interpersonal experiences are also generalised or discarded, creating a shaky foundation that allows for assumptions to form from the biases of the design team. This is at odds with the personal affairs of each user, and the complex interpersonal interactions that occur within a designed digital platform.

When a design transitions from theoretical to tangible, individual user problems and motivations become part of a larger interpersonal and highly political human network, affecting communities in ways that we do not yet fully understand. In Infrastructural Games and Societal Play, Eleanor Saitta writes of the rolling anticipated and unanticipated consequences of systems design: “All intentionally-created systems have a set of things the designers consider part of the scope of what the system manages, but any nontrivial system has a broader set of impacts. Often, emergence takes the form of externalities — changes that impact people or domains beyond the designed scope of the system.”[23] These are no doubt challenges in an empathetically designed system, but in the context of design homogeny, these problems cascade.

In a talk entitled From User Focus to Participation Design, Andie Nordgren argues that participatory design is a step towards developing empathy for users:

“If we can’t get beyond ourselves and our [platforms] – even if we are thinking about the users – it’s hard to transfer our focus to where we actually need to be when designing for participation which is with the people in relation to each other.”[24]

Through inclusion, participatory design extends a design team’s focus beyond the hypothetical or ideal user, considering the interactions between users and other stakeholders over user stories. When implemented with the aim of engaging a diverse range of users during a project, participatory design becomes more political by forcing teams to address weaponised design opportunities during all stages of the process.

Beyond better design paradigms, designers must look beyond the field, toward practices that directly criticise or oppose their work. In particular, security research and user experience design overlap significantly in practice and goals, yet this relationship is often antagonistic. Both fields primarily focus on the systems of wide-scale interactions between users and technology, but their goals are diametrically opposed: design aims to create the best possible experience for a user, while security aims to create the worst possible experience for an attacker. By focusing on the outcomes of the two fields, it’s clear that security research is a form of user experience design. Design should reciprocate, and become a form of security research.

At-risk users themselves are educating each other in operational security and threat modelling techniques. Robert Gehl describes how, increasingly, the threats faced by dark web communities mirror those experienced by the general public, acting as predictors for the future of the internet. In his essay, Proactive Paranoia, he writes:

“Proactive paranoia was forged in the toxic cultural contexts of increasingly militarized states, ubiquitous surveillance, global neoliberalism, and an all-out hustle for online money. In other words, OPSEC politics need not be limited to the dark web. In a world of arrests, scams, fines and fees, constant monitoring, and extreme caveat emptor, suspicion and paranoia are rational responses — not just in dark web markets, but in our daily lives.”[25]

There is tremendous opportunity for designers and information security researchers to cooperatively apply operational security and threat modelling practices to their work. If adopting participation design enables greater focus on interpersonal interactions within a network, then threat modelling and operational security practices offer concrete foundations for addressing human-design socio-political threats within a platform.

Countering the apolitical designer

User experience design must begin to deconstruct the outcomes of its collective body of work, especially as tech becomes more embedded and less visible or more easily ignored. Saitta writes: “All infrastructure is political; indeed, one might better say that all politics is infrastructural; we ignore it at our peril.” Developing new design practices helps to reduce cases of weaponisation through trade-offs in a system’s design, but practice alone is not enough.

Despite the many problematic elements of contemporary design, the community is somewhat capable of self-examination and cultural change. Counterintuitively, within user experience and its related fields, gender inequality seems less pronounced than the incredibly low bar set by other fields within the technology industries[26]. Self-reported research suggests that entry level roles are both well-paid and tend towards equality, with a smaller pay and ratio gap between men and women[27]. This is not to say that the industry is an inclusive field, and furthermore it is unclear whether these trends extend into senior or managerial positions.

The introduction of the Open Code of Conduct[28] is one example of industry self-reflection and regulation. Designed to hold the contributors of a project to account, the commitment of members to a minimum level of expected behaviour as defined in a code of conduct has its roots in organiser or maintainer response to incidents of harassment at conferences and in open source projects[29].

But addressing problematic internal culture of design teams is not enough. As an industry we must also confront the real-world socio-political outcomes of our practice. If we accept a code of conduct as necessary, we must also accept a code of outcomes as necessary. We must create ethical frameworks to evaluate our work at all stages, especially once it is alive in the world. Our lack of ongoing critical evaluation of our profession means that design continues to reinforce a harmful status quo, creating exploitable systems at the expense of societies.

User experience is more than just aesthetics and interfaces. It is a form of cooperative authorship and bound deeply to the infrastructure; each platform and its designers work together to represent a piece of an individual’s digital self and self expression within a wider online community. This is a responsibility on par with publishing literature that transports the reader so fully as to transform one’s self-understanding or producing cinema that ensconces the viewer so deeply that at the end there is a moment of pause to remember which understanding is reality. The online lives we collectively live are inherently mediated by technology, but how we experience this is also mediated by design.

Technology inherits the politics of its authors, but almost all technology can be harnessed in ways that transcend these frameworks. Even in its harmful and chaotic state, it is still possible to marvel at the opportunities afforded to us through design. But arrogant practices that produce brutally naive outcomes must be transcended to facilitate empathetic products and broader platforms. We must stop making decisions inside of a rose-coloured echo-chamber. As designers it is time to collectively address the political, social and human costs of weaponised design.

Cade Diehm

Winter 2018

Revised Fall 2020.

Edited by Maya Ganesh & Arikia Millikan. Special thanks to Rose Regina Lawrence, Sema Karaman, Dalia Othman, Louis Center and Ignatius Gilfedder. Originally commissioned by Tactical Tech. Reproduced under a Creative Commons license.

1. ↩︎

2. Snapchat’s newest feature is also its biggest privacy threat. Dani Deahl, The Verge. 23 June 2017. ↩︎

3. Twitter first announced the change via its own platform. Responses from prominent critics are threaded contextually with the original tweet. ↩︎

4. Twitter quickly kills a poorly thought out anti-abuse measure. Sarah Perez, TechCrunch. 14 February 2017. ↩︎

5. Evolving the Facebook News Feed to Serve You Better. Shali Nguyen & Ryan Freitas, Facebook Design. 15 August 2017. ↩︎

6. Engineering the public: Big data, surveillance and computational politics. Zeynep Tufekci, First Monday. 23 October 2013. ↩︎

7. Social influence and political mobilization: Further evidence from a randomized experiment in the 2012 U.S. presidential election. Jason J. Jones et al., PLOS ONE. 2012. ↩︎

8. At time of writing, the US Justice Department had only just begun its investigation into electioneering via social media platforms and ad buys. ↩︎

9. #Celebgate - iCloud leaks of celebrity private photos. Wikipedia. ↩︎

10. Reporting, Reviewing, and Responding to Harassment on Twitter. J. Nathan Matias et al., Women Action Media. 13 May 2015. ↩︎

11. Sexism in the circuitry: female participation in male-dominated popular computer culture. Michael James Heron, Pauline Belford and Ayşe Göker, ACM SIGCAS Computers and Society. December 2014. ↩︎

12. Experimental evidence of massive-scale emotional contagion through social networks. Adam D. I. Kramer, Jamie E. Guillory and Jeffrey T. Hancock, Proceedings of the National Academy of Sciences of the United States of America. 2 June 2014. ↩︎

13. How Companies Learn Your Secrets. Charles Duhigg, The New York Times. 16 February 2012. ↩︎

14. There were several other key players responsible for pioneering graphical user interfaces and user experience, including Susan Kare, Alan Kay, Larry Tesler, Robert Taylor, and many others. ↩︎

15. ↩︎

16. Universal UX Design: Building Multicultural User Experience. Alberto Ferreira, Morgan Kaufmann. 2017. ↩︎

17. Four screenshots of Facebook’s Year In Review app. Clockwise from top left: Eric Meyer’s deceased daughter, an urn containing the remains of a Facebook user’s parent, a house fire and a recently-deceased beloved pet dog. Each image in the Year in Review app is picked algorithmically and placed against a celebratory new-year themed design. ↩︎

18. Eric’s experience was not unique, nor was he the only person to write about his experience. Facebook’s Year in Review has generated annual criticism since it first debuted. ↩︎

19. “A Honeypot For Assholes”: Inside Twitter’s 10-Year Failure To Stop Harassment. Charlie Warzel, BuzzFeed. 11 August 2016. ↩︎

20. Criticism of Twitter leadership. @safree, Twitter. 30 September 2017. ↩︎

21. This 2016 collection of popular design writing is an example of the industry’s fixation on more trivial issues as larger societal problems were beginning to converge. ↩︎

22. See more: A deeper introduction to user stories and the Agile software development methodology. ↩︎

23. Infrastructural Games and Societal Play. Eleanor Saitta. 30 January 2016. ↩︎

24. From User Focus To Participation Design. Andie Nordgren, Alibis for Interaction. 3 October 2014. ↩︎

25. Proactive Paranoia. Robert Gehl, Real Life Magazine. 24 August 2017. ↩︎

26. Artificial Intelligence’s White Guy Problem. Kate Crawford, The New York Times. 25 June 2016. ↩︎

27. The majority of employment and gender reporting is presented by industry bodies. In this case, the cited research was published by the User Experience Professionals Association, a US organisation. ↩︎

28. For an introduction to Codes of Conduct within the open source software community, see the Open Source Code of Conduct guide. ↩︎

29. In 2015, GitHub adopted the Code of Conduct as an official feature and policy, influencing the further spread of the concept. ↩︎