Signalbots: Secrets Distribution and Social Graph Protection for Private Groups

Today, the security requirements for working safely can be almost indistinguishable from those of journalists, activists and human rights defenders. Yet many widely adopted tools offer little protection against abuse by outsiders or targeting by competitors and ideological opponents.

What does it mean to organise, cooperate and moderate online in this panopticon era, where, as Professor Robert W. Gehl observes, the security practices of everyday people increasingly mirror those of paranoid dark web users? Best practice holds that it is better to build on a proven stack than to develop new security protocols and platforms from scratch.

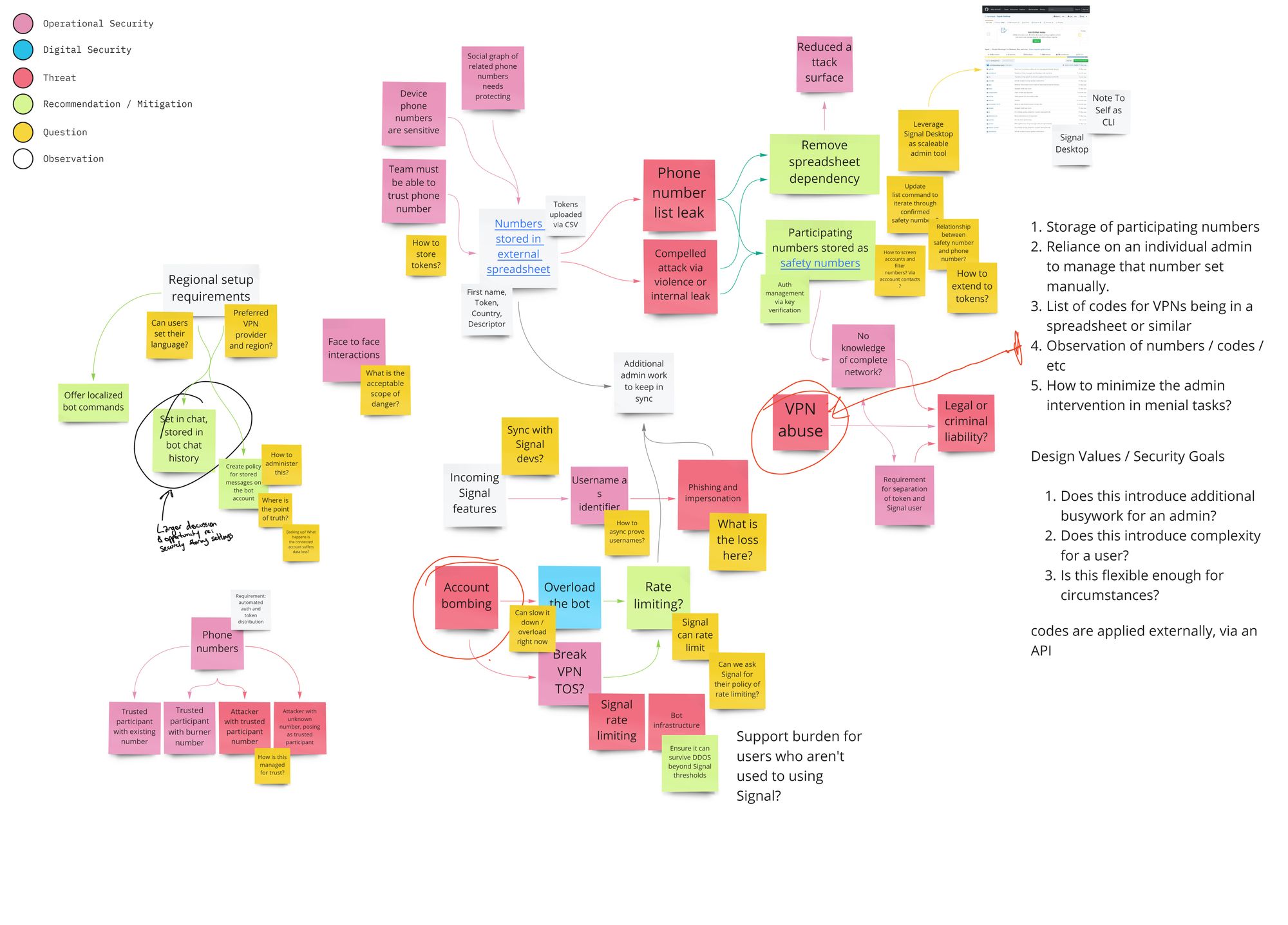

In collaboration with Superbloom Design and Throneless Tech, we conducted a multi-month, in-depth threat modelling assessment of an innovative approach to organisational security. Building on Signal, one of the most useful and widely adopted tools for secure communication, we worked with Throneless Tech to map a user-powered chatbot infrastructure onto Signal groups. The project is a ground-up system that protects the social graphs of secure Signal groups and provides IRC-like services to all participants, without adding confusing new overhead for the group's members.

In our first test build, completed in collaboration with the Open Technology Fund and Reset Tech, these Signal bots automate the distribution of VPN access and other resources to people in environments with restricted Internet freedom. Our design is novel in that it relies on Signal's end-to-end encryption for every part of the bot, from bot-specific group settings to identity and trust. Unlike with a traditional third-party bot, almost no data leaves the Signal network, so the bot itself cannot be attacked to profile the participants involved. At the same time, a group's owner can adjust levels of trust for participants and moderators, so that internal bad actors or other rogue users cannot access the user list.

This threat modelling consultation illustrates how Signal can be deployed in novel ways beyond those officially supported by Signal's developers. At the same time, our documentation and distinctive focus on socio-technical security practices allowed us to identify threats and set strong risk assessment guidelines for targeted groups.

Our work was demonstrated[2] at the international digital security conference HOPE 2020 by Sarah Aoun, chief technologist at the Open Technology Fund, and Josh King of Throneless Tech. This is an ongoing engagement, and from our initial work we have collectively identified a set of opportunities for expanding these tools and applying them to a variety of organisational and community needs.

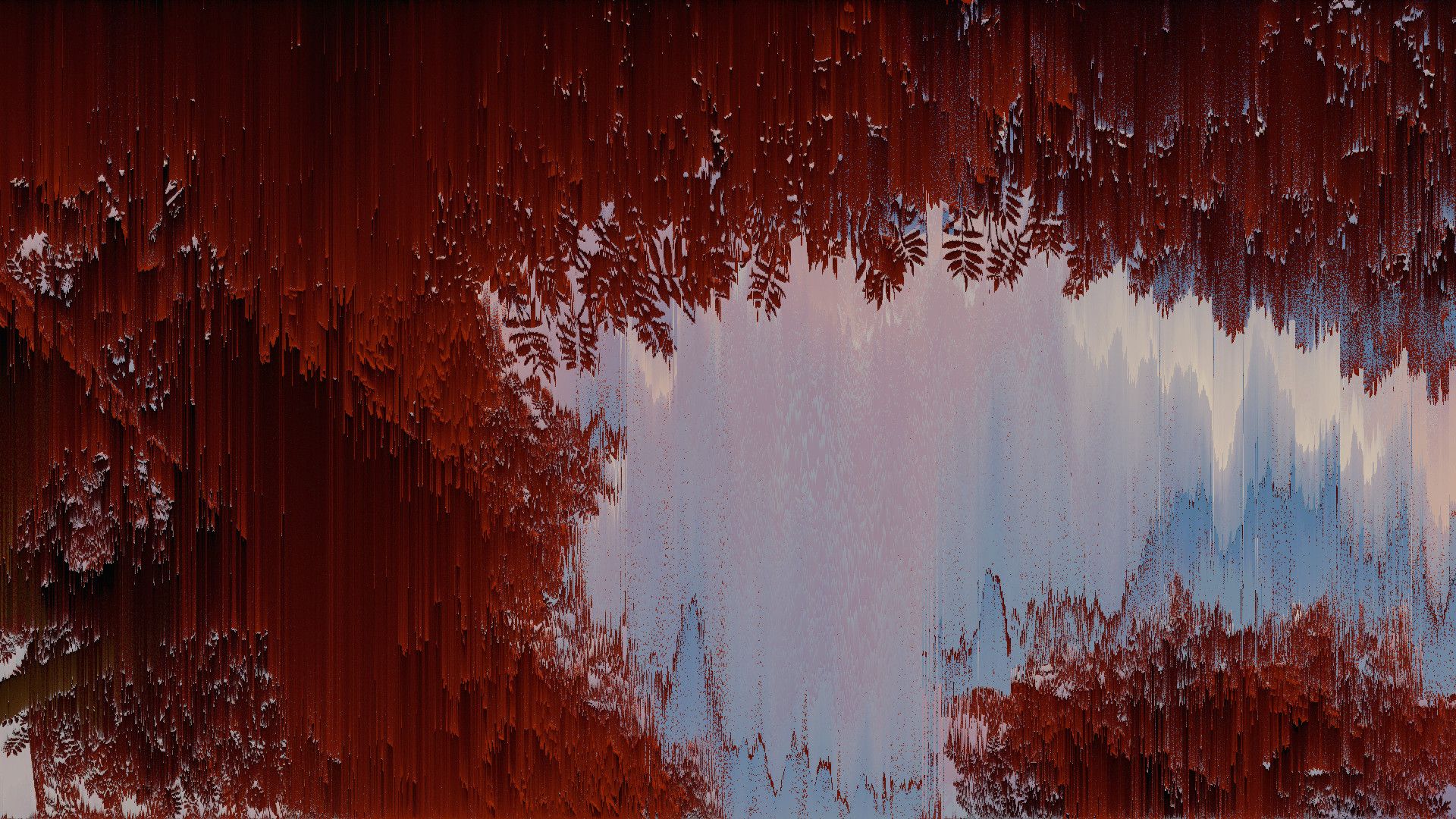

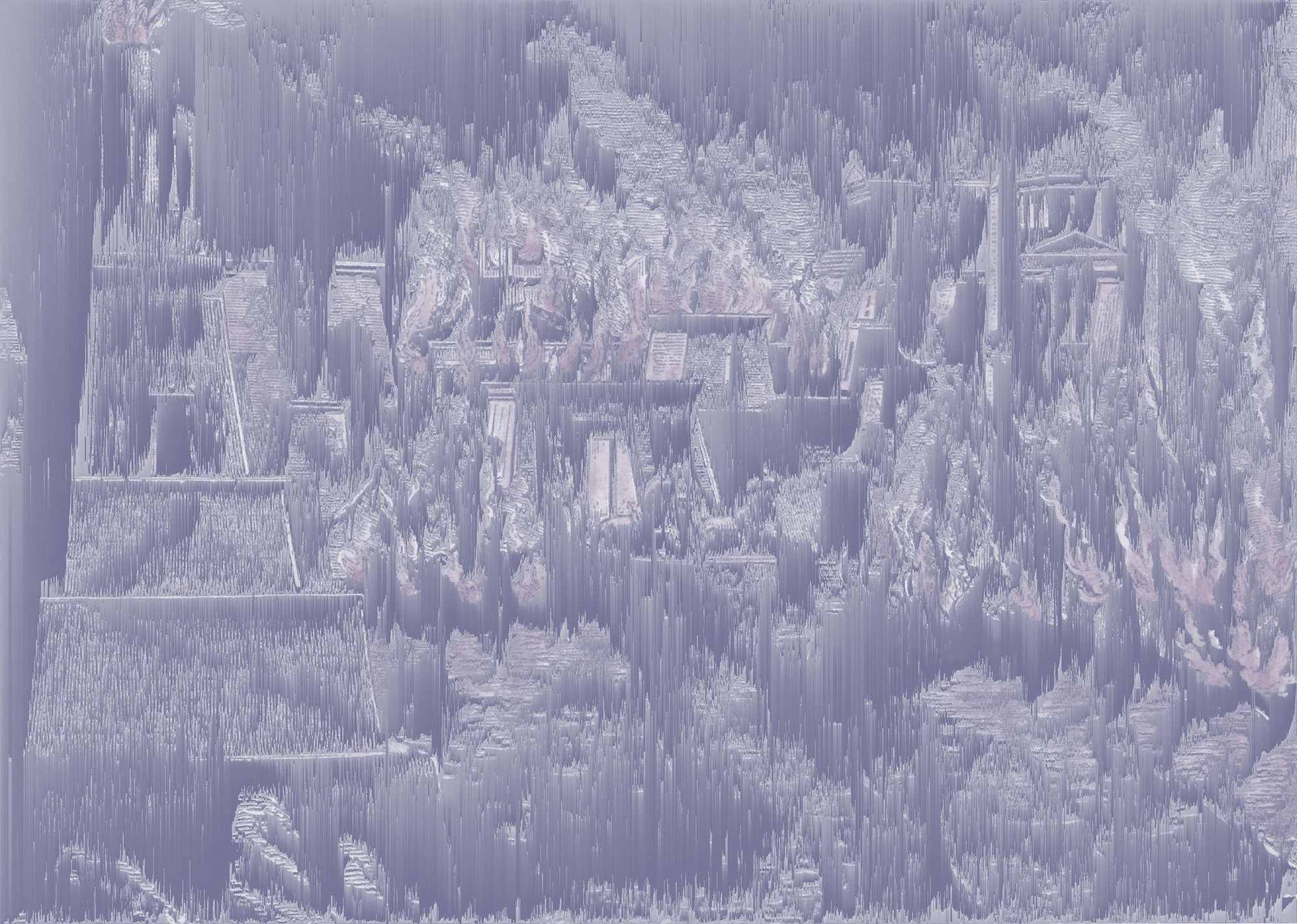

Collaborative systems and threat modelling for Signal bots, designed to help the Throneless team anticipate rapidly changing threats to on-the-ground secure communication and resource distribution.


[2] Signalbots: Secrets Distribution and Social Graph Protection for Activists


1 August 2020